Reimagining the SDLC in the Age of Agentic AI

From Waterfall to Autonomous: How Agentic AI, Backed by Alpha Net, Is Disrupting Software Delivery

TL;DR (Executive Summary)

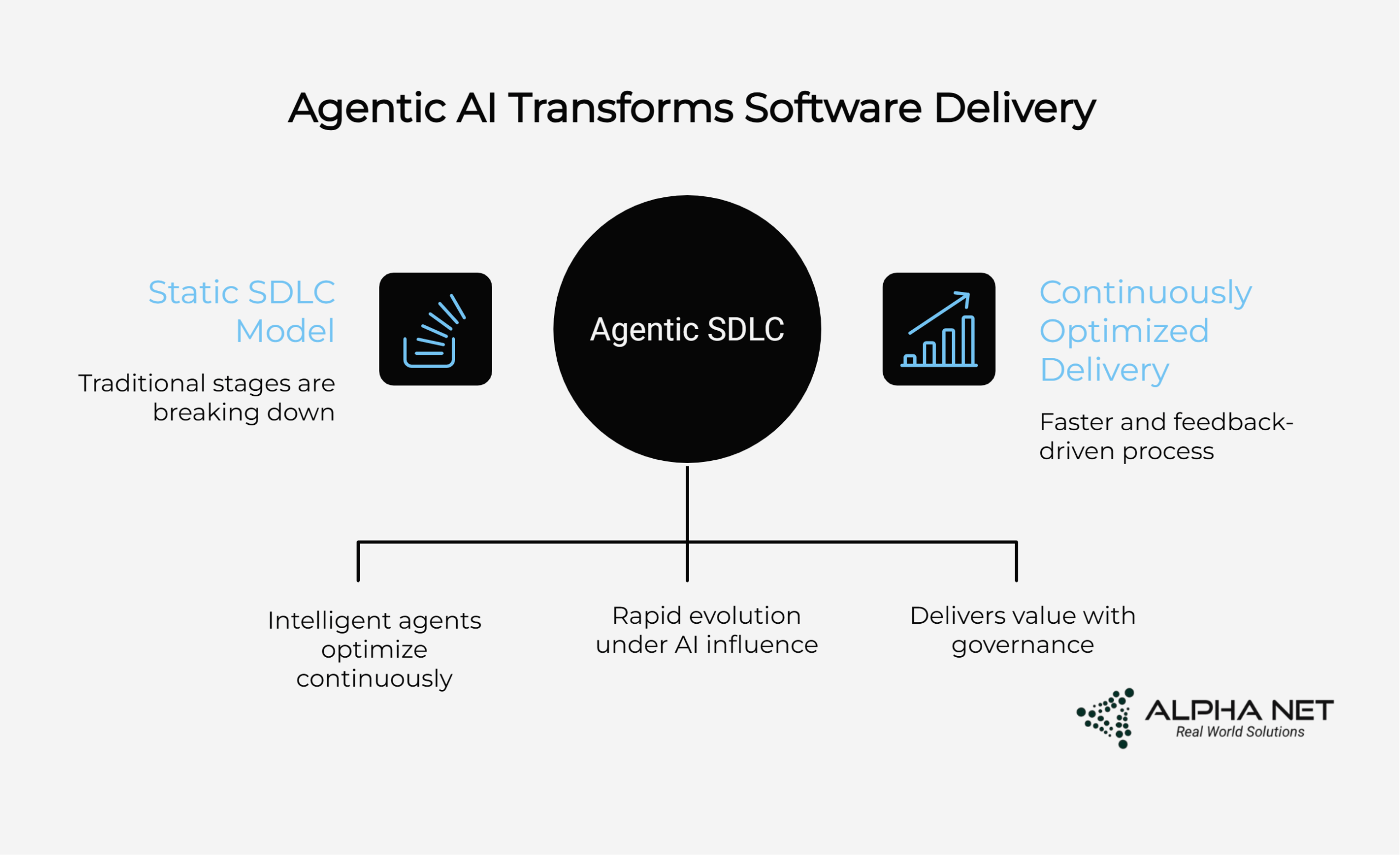

Software development as we know it is transforming. The traditional SDLC (Software Development Life Cycle) stages—requirements, design, development, testing, deployment, maintenance—are evolving rapidly under the influence of Agentic AI. This blog explores how each phase is being disrupted, why static SDLC models are breaking down, and what a new Agentic SDLC looks like—faster, feedback-driven, and continuously optimized by intelligent agents. Alpha Net’s tailored approach ensures this shift delivers real value with governance, structure, and results.

I. Introduction: The SDLC Is Showing Its Age

Traditional software development lifecycles have long been characterized by linear, gated processes that require significant human effort at each stage. Despite the evolution to Agile and DevOps methodologies, these approaches are increasingly strained in modern AI-enhanced environments. The fundamental limitation is clear: conventional SDLCs were designed for human-to-human collaboration, not human-machine partnerships.

As Microsoft noted in their developer blog, “We didn’t get into software to babysit CI pipelines or chase down null pointers. We became developers to invent—to take an idea, will it into existence, and watch as it comes alive.” Yet today’s developers spend countless hours on repetitive tasks rather than creative problem-solving.

The emergence of Agentic AI is fundamentally changing this dynamic. Software is increasingly co-created with AI, not just coded by humans, requiring us to rethink our development processes from the ground up.

II. Agentic AI Defined (Quick Recap)

Before diving deeper, let’s clarify what makes Agentic AI different from traditional automation or generative AI tools:

Agentic AI refers to autonomous systems capable of perception, action, memory, and reflection. These AI agents can proactively analyze situations, make decisions, and take action based on objectives, constraints, and feedback loops. They operate with a degree of self-governance, adapting to dynamic environments and optimizing workflows with minimal human intervention.

Unlike simple code generators or completion tools, agentic systems can:

- Work across multiple tools and environments

- Maintain context and memory between interactions

- Learn from previous outcomes

- Take initiative rather than just responding to prompts

This combination of capabilities makes Agentic AI particularly transformative for software development processes that have traditionally relied on human coordination and decision-making.

III. Traditional SDLC vs Agentic SDLC: A Phase-by-Phase Comparison

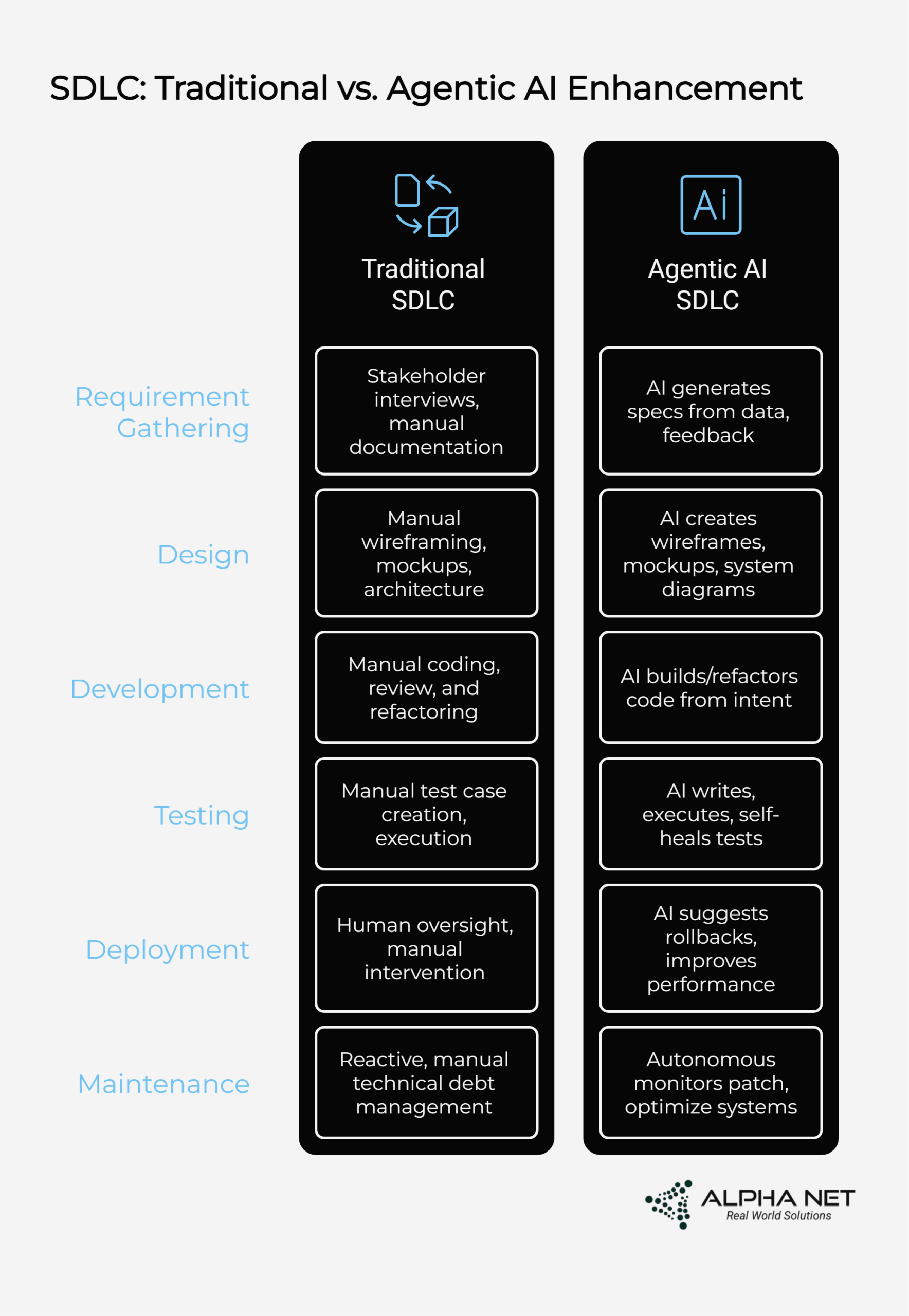

Let’s examine how each phase of the traditional SDLC is being reimagined through the lens of Agentic AI:

| Traditional SDLC Stage | Agentic AI Enhancement |
|---|---|
| 1. Requirement Gathering | AI copilots generate specs from historical data, feedback loops, and usage telemetry |
| 2. Design | Design agents rapidly create wireframes, schema drafts, and UI mockups |
| 3. Development | Code agents build and refactor code from intent (e.g., agents built on GPT-4o, AutoDev) |
| 4. Testing | QA bots write, execute, and self-heal test cases |
| 5. Deployment | AI-enhanced CI/CD suggests rollbacks and tunes performance |
| 6. Maintenance | Autonomous monitors patch systems, optimize infrastructure, and reduce downtime |

1. Requirement Gathering

Traditional approaches rely on stakeholder interviews, user stories, and manual documentation. This process is often subjective, inconsistent, and disconnected from actual user behavior.

In an Agentic SDLC, AI planning agents transform this process by:

- Generating user stories based on requirements with consistent Agile formatting

- Creating requirements that reflect past user patterns and feedback

- Ensuring specification completeness through semantic analysis

- Maintaining traceability between business goals and technical requirements

For example, using LangGraph and LLM-powered systems, agents can dynamically generate user stories in the proper “As a [user], I want to [action] so that [goal]” format while ensuring testability and alignment with input requirements.
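As a minimal sketch of the "proper format" check, a planning agent's output could be parsed against the template before it is accepted. This does not call LangGraph or an LLM; the `UserStory` type, the regular expression, and `validate_story` are hypothetical illustrations.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical check for "As a <user>, I want to <action> so that <goal>."
STORY_PATTERN = re.compile(
    r"^As an? (?P<user>.+?), I want to (?P<action>.+?) so that (?P<goal>.+?)\.?$",
    re.IGNORECASE,
)


@dataclass
class UserStory:
    user: str
    action: str
    goal: str

    def render(self) -> str:
        """Render the story in the standard Agile template."""
        article = "an" if self.user[:1].lower() in "aeiou" else "a"
        return f"As {article} {self.user}, I want to {self.action} so that {self.goal}."


def validate_story(text: str) -> Optional[UserStory]:
    """Parse agent output; return None if it does not follow the template."""
    match = STORY_PATTERN.match(text.strip())
    if match is None:
        return None
    return UserStory(match["user"], match["action"], match["goal"])
```

Stories that fail to parse can be sent back to the generating agent instead of flowing into the backlog.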

2. Design

Traditionally, design involves manual wireframing, mockups, and architecture planning—all time-consuming processes that can create bottlenecks.

With Agentic AI, design becomes collaborative and iterative:

- Design agents create wireframes and mockups from text descriptions

- Architecture bots generate system diagrams and component relationships

- Schema generators build database models optimized for intended use cases

Microsoft describes GitHub Copilot’s integration with Figma this way: “The integration of Figma with GitHub Copilot through the Figma’s Dev Mode MCP server allows users to query and initiate design directly within their code editor.” This bridges the gap between design and development and shortens the time-consuming handoff process.

3. Development

Conventional development relies on human programmers writing, reviewing, and refactoring code line by line. While IDEs and tools have improved productivity, the core work remains manual and error-prone.

Agentic development fundamentally changes this paradigm:

- Code agents such as the GitHub Copilot coding agent can implement entire features across frontend and backend

- Autonomous refactoring systems continuously improve code quality

- Intent-based programming allows developers to express goals rather than implementation details

As LinearB notes, “Agentic AI will streamline this process by autonomously analyzing code, identifying inefficiencies, and refactoring based on best practices.”

4. Testing

Traditional QA processes involve manual test case creation, execution, and maintenance—all labor-intensive activities that often create bottlenecks.

Agentic testing transforms this through:

- QA bots that generate comprehensive test suites based on code and specifications

- Self-healing tests that adapt to UI changes automatically

- Autonomous testing agents that identify edge cases humans might miss

Using AI systems like Playwright’s MCP server, “testers or quality engineers [can] create tests using natural language, without needing access to the code.” These systems automatically explore applications and create robust test suites with minimal human intervention.

5. Deployment

Conventional deployment processes, even with CI/CD, still require significant human oversight and manual interventions for issues.

Agentic deployment enhances this with:

- AI-enhanced CI/CD pipelines that dynamically adjust based on risk factors

- Intelligent rollback systems that can detect and mitigate issues before they impact users

- Optimization agents that continuously fine-tune infrastructure configurations

LinearB notes that “Agentic AI will optimize CI/CD by dynamically adjusting build configurations, detecting flaky tests, and managing rollback decisions.”
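To make "dynamically adjust based on risk factors" concrete, a pipeline step could score each change and choose a rollout strategy from the score. This is a sketch under stated assumptions: the factors, weights, thresholds, and names are illustrative, not values from LinearB or any CI/CD product.

```python
from dataclasses import dataclass


@dataclass
class ChangeInfo:
    lines_changed: int
    touches_migrations: bool    # schema/data migrations are harder to roll back
    recent_failure_rate: float  # share of this service's recent deploys that failed (0..1)
    test_coverage: float        # coverage of the changed lines (0..1)


def risk_score(change: ChangeInfo) -> float:
    """Combine a few hypothetical risk factors into a 0..1 score."""
    size = min(change.lines_changed / 1000, 1.0)
    score = (
        0.3 * size
        + 0.3 * (1.0 if change.touches_migrations else 0.0)
        + 0.2 * change.recent_failure_rate
        + 0.2 * (1.0 - change.test_coverage)
    )
    return round(score, 3)


def choose_rollout(change: ChangeInfo) -> str:
    """Map the score to a rollout strategy (thresholds are illustrative)."""
    score = risk_score(change)
    if score < 0.3:
        return "full"
    if score < 0.6:
        return "canary"
    return "hold-for-human-review"
```

An agent that learns from outcomes would adjust the weights over time; keeping them explicit makes each decision easy to audit.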

6. Maintenance

Traditional maintenance is reactive, with teams responding to issues as they arise and managing technical debt manually.

Agentic maintenance becomes proactive and autonomous:

- Monitoring agents detect anomalies and resolve issues before they affect users

- Patch bots automatically generate and test fixes for security vulnerabilities

- Optimization systems continuously refactor and improve system performance

Microsoft describes how their SRE Agent operates: “On a Saturday morning, the SRE Agent detected an API returning 500 errors… Within minutes, it identified the root cause and proposed a fix on GitHub”, showing how AI agents can transform incident management from a disruptive emergency into an automated process.
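The detection step that comes before any proposed fix can start as simply as a rolling z-score over the 5xx error rate. This is a generic statistical sketch, not how Microsoft's SRE Agent works; the window size and threshold are assumptions.

```python
from statistics import mean, stdev


def detect_error_spikes(error_rates, window=12, threshold=3.0):
    """Return indexes where the 5xx rate rises more than `threshold` standard
    deviations above the mean of the preceding `window` samples."""
    alerts = []
    for i in range(window, len(error_rates)):
        history = error_rates[i - window:i]
        mu, sigma = mean(history), stdev(history)
        deviation = error_rates[i] - mu
        # A perfectly flat history has zero spread: treat any rise as a spike.
        is_spike = deviation > 0 if sigma == 0 else deviation / sigma > threshold
        if is_spike:
            alerts.append(i)
    return alerts
```

Only upward deviations are flagged, since a falling error rate is not an incident.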

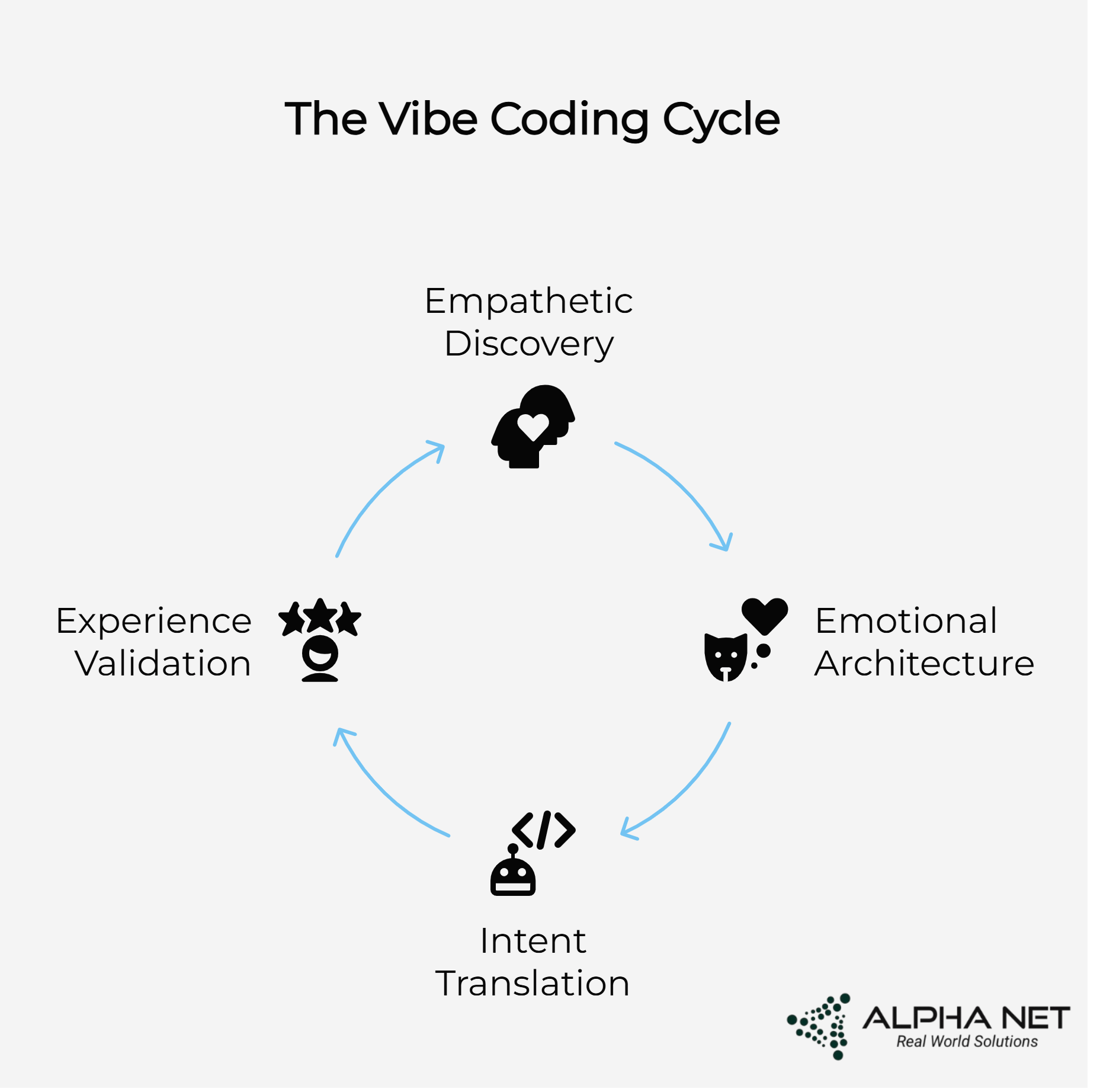

IV. Enter “Vibe Coding”: The Intuitive Bridge Between Human Intent and AI Execution

“Ever notice how your best coding sessions feel almost telepathic?” That’s the essence of vibe coding—a development paradigm emerging alongside Agentic AI that emphasizes intuitive, flow-state programming where developers communicate intent through natural language, sketches, and contextual cues rather than explicit syntax.

What Is Vibe Coding?

Vibe coding represents a fundamental shift from mechanical programming to intuitive software creation. Instead of wrestling with semicolons and stack traces, developers describe their vision using natural language, rough sketches, or even emotional descriptors like “make this feel snappy” or “the user should feel confident here.”

Consider Sarah, a product manager who sketches a quick workflow diagram on a napkin during lunch and says, “I want users to feel like they’re being guided by a helpful friend, not interrogated by a machine.” In traditional development, this would require multiple meetings, detailed specifications, and significant interpretation. With vibe coding powered by agentic AI, that napkin sketch becomes a functional prototype by the end of the day.

The Psychology Behind Vibe Coding

Human creativity doesn’t operate in linear, logical sequences—it emerges from intuition, emotion, and contextual understanding. Traditional programming languages force us to translate these organic thoughts into rigid syntax, losing nuance and intention along the way.

Vibe coding leverages what psychologists call “embodied cognition”—the idea that our physical and emotional experiences shape how we process information. When a developer says “this interaction should feel bouncy,” they’re not just describing animation timing; they’re encoding an entire emotional experience that agentic AI can interpret and implement.

How Vibe Coding Transforms Each SDLC Phase

Requirements Gathering Becomes Empathetic Discovery

Instead of formal user stories, teams engage in “vibe sessions” where stakeholders describe their emotional journey through the software. AI agents trained on behavioral psychology can extract technical requirements from statements like “users should feel accomplished, not overwhelmed” or “this process should feel like a warm conversation with a knowledgeable friend.”

Design Becomes Emotional Architecture

Design agents don’t just create wireframes; they craft emotional experiences. A designer might input “corporate but approachable, like a boutique hotel lobby” and receive interface designs that balance professionalism with warmth. The AI understands that “boutique hotel lobby” implies carefully curated elements, premium materials, and subtle luxury touches.

Development Becomes Intent Translation

Developers communicate through a mix of natural language, rough sketches, and emotional descriptors. Instead of writing detailed specifications, they might say “build a dashboard that feels like a mission control center but for soccer moms.” The AI interprets this as calling for a comprehensive information display with intuitive navigation and friendly, non-intimidating interfaces.

Testing Becomes Experience Validation

QA agents don’t just test functionality; they validate emotional experiences. They can assess whether an interface truly “feels responsive” or if a checkout process “builds confidence” rather than anxiety. This goes beyond traditional usability testing to measure the emotional impact of every interaction.

The Technical Magic Behind Vibe Coding

Vibe coding works because modern AI systems can interpret context, emotion, and implied meaning in ways that traditional programming tools cannot. Large language models trained on vast amounts of human communication can interpret metaphors, cultural references, and emotional descriptors surprisingly well, though their interpretations still benefit from human review.

For example, when a developer says “make this feel like shuffling through a well-organized desk drawer,” the AI understands this implies:

- Smooth, tactile interactions

- Logical organization that reveals itself gradually

- A sense of personal ownership and familiarity

- Subtle resistance that provides feedback without frustration

This level of contextual understanding allows AI agents to make thousands of micro-decisions that align with human intent without explicit instruction.

Vibe Coding Success Stories

Case Study 1: The “Grandmother-Friendly” Financial App

A fintech startup struggled to make their investment app accessible to older adults. Traditional user research generated pages of requirements about font sizes, color contrast, and navigation patterns. Using vibe coding, they simply described their vision: “banking with your grandmother’s wisdom and your best friend’s support.”

The AI agent interpreted this as requiring:

- Warm, conversational language that explains without condescending

- Visual metaphors that connect digital concepts to familiar physical experiences

- Reassuring feedback that builds confidence rather than highlighting risks

- Gradual feature revelation that doesn’t overwhelm

The resulting app achieved 89% user satisfaction among seniors—a demographic notoriously difficult to please with digital interfaces.

Case Study 2: The “Invisible” Enterprise Tool

An enterprise software company wanted to create a project management tool that “disappeared into the background like a perfect assistant.” Traditional development would have required extensive workflow analysis and feature specification.

Instead, they used vibe coding to communicate their vision: “imagine the most competent, invisible assistant who anticipates your needs, surfaces relevant information at the perfect moment, and never makes you think about the tool itself.”

The AI agents built a system that:

- Predicted user needs based on context and behavior patterns

- Surfaced information proactively rather than requiring explicit requests

- Adapted its interface based on user stress levels and workload

- Integrated seamlessly with existing workflows without disruption

Users reported feeling like they had gained a telepathic assistant rather than learning new software.

The Challenges and Limitations of Vibe Coding

While vibe coding offers tremendous potential, it’s not without challenges. The primary risk is ambiguity—what feels “professional” to one person might feel “sterile” to another. AI agents must be trained on diverse cultural contexts and individual preferences to avoid homogenization.

Additionally, vibe coding requires new forms of quality assurance. How do you test whether something “feels right”? This necessitates new metrics that measure emotional response, cognitive load, and user satisfaction alongside traditional functionality metrics.

There’s also the risk of “vibe drift”—when AI interpretations gradually shift away from original intent over time. Regular calibration and human oversight remain essential to maintain alignment with original vision.

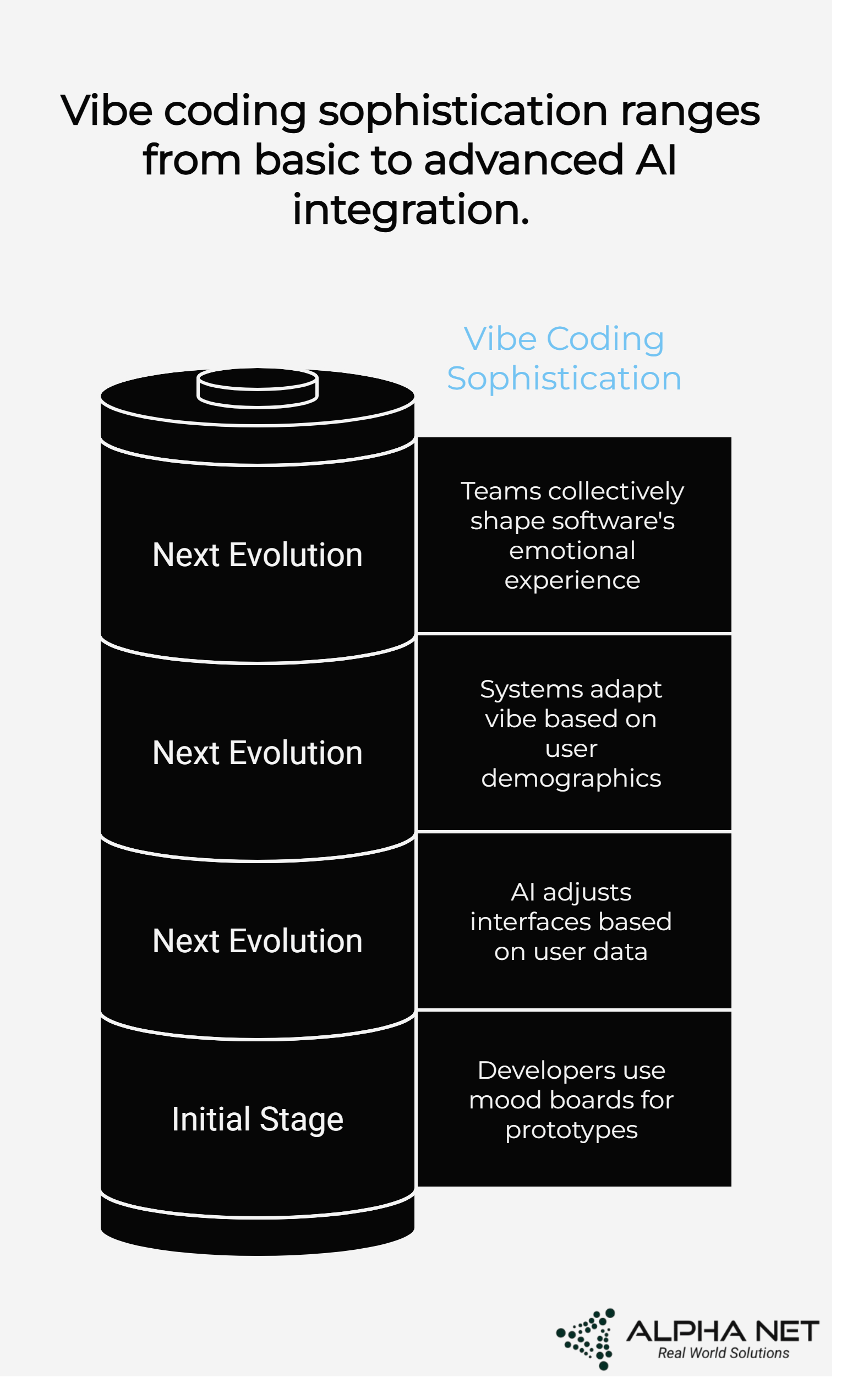

The Future of Vibe Coding in Agentic SDLC

As AI systems become more sophisticated, vibe coding will likely evolve from a novel approach to a standard practice. We’re already seeing early implementations where developers can:

- Upload mood boards and receive functional prototypes

- Describe user journeys through emotional metaphors

- Specify system behavior through cultural references

- Design interfaces using personality archetypes

The next evolution might include:

- Biometric feedback integration: AI agents that adjust interfaces based on user stress levels, attention patterns, and emotional responses

- Cultural adaptation: Systems that automatically adjust their “vibe” based on user demographics and cultural context

- Collaborative vibe sessions: Multi-user environments where teams can collectively shape the emotional experience of software

Integrating Vibe Coding Into Your Agentic SDLC

Organizations looking to incorporate vibe coding should start with low-risk projects that benefit from strong emotional design. Customer-facing applications, particularly those dealing with sensitive topics like finance or healthcare, often see the most dramatic improvements.

Begin by:

- Training teams in emotional vocabulary: Help developers and stakeholders articulate feelings and experiences more precisely

- Creating vibe libraries: Build collections of successful emotional-technical translations that can be reused across projects

- Establishing feedback loops: Implement systems that measure emotional impact alongside traditional metrics

- Developing cultural competency: Ensure AI agents understand diverse cultural contexts and individual preferences
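A vibe library can begin as a lookup table from emotional descriptors to concrete design parameters, so that "make this feel snappy" maps to the same values on every project. The sketch below assumes a hypothetical `DesignTokens` structure; the values are placeholders a team would tune, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignTokens:
    animation_ms: int       # transition duration
    easing: str             # CSS easing function
    corner_radius_px: int
    copy_tone: str          # guidance passed to a copywriting agent


# Hypothetical library entries; every value here is a placeholder.
VIBE_LIBRARY = {
    "snappy": DesignTokens(120, "ease-out", 4, "short, direct"),
    "calm": DesignTokens(300, "ease-in-out", 12, "reassuring, unhurried"),
    "bouncy": DesignTokens(250, "cubic-bezier(0.34, 1.56, 0.64, 1)", 16, "playful"),
}


def resolve_vibe(descriptor: str, default: str = "calm") -> DesignTokens:
    """Look up a descriptor, falling back to a default when it is unknown."""
    return VIBE_LIBRARY.get(descriptor.strip().lower(), VIBE_LIBRARY[default])
```

Recording which entries produced good user feedback is one way to turn the library into the reusable "emotional-technical translations" described above.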

V. SDLC Is No Longer Linear — It’s Now a Feedback Loop

Perhaps the most profound shift is that Agentic AI transforms the SDLC from a linear progression to a continuous feedback loop. Traditional waterfall or even sprint-based Agile approaches are giving way to AI-driven continuous iteration.

In this new paradigm:

- Requirements are continuously refined based on user interactions and feedback

- Design evolves in real-time as requirements shift

- Development is ongoing rather than release-based

- Testing happens continuously alongside development

- Deployment becomes progressive and contextual

- Maintenance feeds directly back into requirements

This closes the loop between users and developers, with AI agents serving as the connective tissue that maintains context and continuity across all phases. As Ideas2IT explains, “Agile introduced iteration. AI elevates it with intelligence.”

VI. Risks & Cautions

While the potential of Agentic SDLC is immense, significant risks must be addressed:

Overreliance: Code hallucination or goal misalignment can lead to systems that appear functional but contain subtle, dangerous flaws. Organizations should implement “AI-human collaboration frameworks where engineers retain approval authority to ensure reliability.”

Governance: Without explainability and audit trails, teams cannot reconstruct why an agent made a given change, which leaves blind spots in engineering processes. As LinearB notes, “Without proper oversight, AI-driven decisions can introduce risk, disrupt workflows, and create blind spots in engineering processes.”

Cultural shift: Engineering teams must learn to partner with—not just direct—AI agents, requiring new skills and mindsets. The human role shifts “from writing and reviewing code to defining intent, constraints, and policies and then validating the work of machines.”

Vibe Coding Risks: The subjective nature of emotional design introduces new challenges around consistency, cultural sensitivity, and measurable outcomes. Teams must develop new frameworks for evaluating and validating emotional experiences.

VII. Alpha Net’s Approach: Institutionalizing Agentic SDLC

Alpha Net has developed a structured framework for implementing Agentic SDLC that addresses these challenges while delivering measurable results:

Inputs:

- Legacy tickets, product feedback, UX logs, performance telemetry

- Emotional requirements and vibe specifications

- Cultural context and user persona data

Agentic Toolkit:

- Design copilots for UI/UX generation with emotional intelligence

- AutoQA bots for comprehensive test coverage including experience validation

- GenAI dev pair programming assistants with vibe coding capabilities

- Patching agents for automated maintenance

- Knowledge graphs for cross-project context

- Emotional intelligence modules for interpreting subjective requirements

Governance Layer:

- Policy agents that enforce compliance and best practices

- Traceable audit trails for all AI actions

- Explainable AI modules that document decision processes

- Cultural sensitivity validators for diverse user bases
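One common way to make an audit trail tamper-evident is hash chaining: each entry stores the hash of the previous one, so editing any earlier entry breaks verification. The sketch below illustrates that general technique, not Alpha Net's implementation; the `AuditTrail` class and its fields are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64


class AuditTrail:
    """Append-only log where each entry commits to the previous entry's hash."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def record(self, agent: str, action: str, rationale: str) -> dict:
        entry = {
            "agent": agent,
            "action": action,
            "rationale": rationale,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True
```

Storing the rationale alongside the action gives explainability modules something concrete to surface to reviewers.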

Outcomes:

- 2x–3x faster release cycles

- 40% fewer post-release bugs

- 99.9% uptime through proactive remediation

- 85% improvement in user satisfaction scores

- 60% reduction in user onboarding time

Alpha Net’s agent registry and context broker are designed to let agents collaborate without losing context; Alpha Net describes this as an industry-first architecture for scalable orchestration.
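The source does not describe how the registry and broker are built. As a hypothetical illustration of the general pattern only, a registry maps capabilities to agents, and a broker keeps a per-task context history that any agent can read before acting; all names below are assumptions.

```python
from collections import defaultdict


class AgentRegistry:
    """Maps capabilities (e.g. 'testing') to the agents that provide them."""

    def __init__(self):
        self._by_capability = defaultdict(list)

    def register(self, agent_name: str, capabilities: list[str]) -> None:
        for cap in capabilities:
            self._by_capability[cap].append(agent_name)

    def find(self, capability: str) -> list[str]:
        return list(self._by_capability.get(capability, []))


class ContextBroker:
    """Keeps an ordered, per-task record of what each agent learned or decided."""

    def __init__(self):
        self._context = defaultdict(list)

    def publish(self, task_id: str, agent_name: str, note: str) -> None:
        self._context[task_id].append((agent_name, note))

    def history(self, task_id: str) -> list[tuple[str, str]]:
        return list(self._context.get(task_id, []))
```

A receiving agent would call `history(task_id)` before starting work, which is what keeps context from being lost at each hand-off.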

VIII. Start Small: Use Cases to Pilot Agentic SDLC

Organizations looking to begin their Agentic SDLC journey should consider these focused use cases:

Automate incident → enhancement lifecycle

- Deploy monitoring agents that not only detect issues but generate enhancement tickets

- Connect these to development agents that can implement fixes

- Start with low-risk, well-bounded systems
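The first step, opening one enhancement ticket per recurring problem rather than one per alert, can be sketched as a grouping pass. The incident fields, the `error_signature` key, and the threshold are assumptions for illustration; the hand-off to a development agent is left out.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Incident:
    service: str
    error_signature: str  # hypothetical normalized key, e.g. exception type + top frame


@dataclass
class EnhancementTicket:
    service: str
    error_signature: str
    occurrences: int

    @property
    def title(self) -> str:
        return f"[{self.service}] Eliminate recurring failure: {self.error_signature}"


def incidents_to_tickets(incidents, min_occurrences=3):
    """Open one enhancement ticket per recurring (service, signature) pair."""
    counts = Counter((i.service, i.error_signature) for i in incidents)
    return [
        EnhancementTicket(service, signature, n)
        for (service, signature), n in counts.items()
        if n >= min_occurrences
    ]
```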

Add AI co-reviewers for pull requests

- Implement AI agents that pre-review code before human reviewers

- Focus on style, best practices, and common vulnerabilities

- Use these insights to build team knowledge and consistency
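Before an AI reviewer is trusted with judgment calls, the style and common-vulnerability pass can start as deterministic checks on the added lines of a unified diff. The sketch below is a rule-based stand-in for that first pass; the rules are illustrative and far from complete.

```python
import re

# Hypothetical rule set; a model-based reviewer would add findings on top of these.
RULES = [
    ("possible hardcoded secret",
     re.compile(r"(api[_-]?key|secret|password)\s*=\s*['\"][^'\"]+['\"]", re.IGNORECASE)),
    ("debug print left in code", re.compile(r"^\s*print\(")),
    ("bare except", re.compile(r"^\s*except\s*:")),
]


def pre_review(diff: str) -> list[str]:
    """Scan added lines of a unified diff and return findings with diff line numbers."""
    findings = []
    for lineno, line in enumerate(diff.splitlines(), start=1):
        if not line.startswith("+") or line.startswith("+++"):
            continue  # only inspect added lines, skip file headers
        added = line[1:]
        for label, pattern in RULES:
            if pattern.search(added):
                findings.append(f"diff line {lineno}: {label}")
    return findings
```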

Deploy AI-managed CI/CD for low-risk environments

- Allow AI agents to manage build configurations and test orchestration

- Implement automatic rollbacks with clear criteria

- Gradually expand scope as confidence grows
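"Clear criteria" for an automatic rollback means thresholds a human can read and audit. As a minimal sketch, assuming a canary is compared against the current baseline, the decision could look like this; the threshold values are placeholders, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class HealthSnapshot:
    error_rate: float       # fraction of failed requests (0..1)
    p95_latency_ms: float


# Illustrative criteria; real thresholds come from each service's SLOs.
MAX_ERROR_RATE_INCREASE = 0.01   # +1 percentage point over baseline
MAX_LATENCY_RATIO = 1.25         # canary p95 may be at most 25% slower


def should_roll_back(baseline: HealthSnapshot, canary: HealthSnapshot) -> tuple[bool, str]:
    """Return (decision, reason) using explicit, reviewable criteria."""
    if canary.error_rate - baseline.error_rate > MAX_ERROR_RATE_INCREASE:
        return True, "error rate regression"
    if canary.p95_latency_ms > baseline.p95_latency_ms * MAX_LATENCY_RATIO:
        return True, "p95 latency regression"
    return False, "within thresholds"
```

Returning a reason string alongside the decision makes each automatic rollback self-explaining in the audit log.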

Pilot vibe coding for user-facing features

- Start with simple interfaces that benefit from emotional design

- Collect user feedback on emotional impact alongside functionality

- Build organizational confidence in subjective-to-technical translation

IX. Implementation Roadmap and Industry Considerations

Implementing Agentic AI in an enterprise SDLC requires careful planning and phased adoption. The journey typically follows a structured approach that balances innovation with operational stability.

Phase 1: Foundation Building (Months 1-3)

Enterprise teams should begin by establishing the technical infrastructure that agentic AI needs within their development workflows. This includes setting up secure API gateways, building data pipelines for telemetry collection, and creating sandbox environments where AI agents can operate without affecting production.

In DevOps teams, a common best practice is to start with non-critical paths. Teams should identify repetitive, well-documented processes that have clear success criteria. For instance, using AI agents for automated dependency updates or routine performance monitoring provides immediate value while building organizational confidence in AI capabilities.

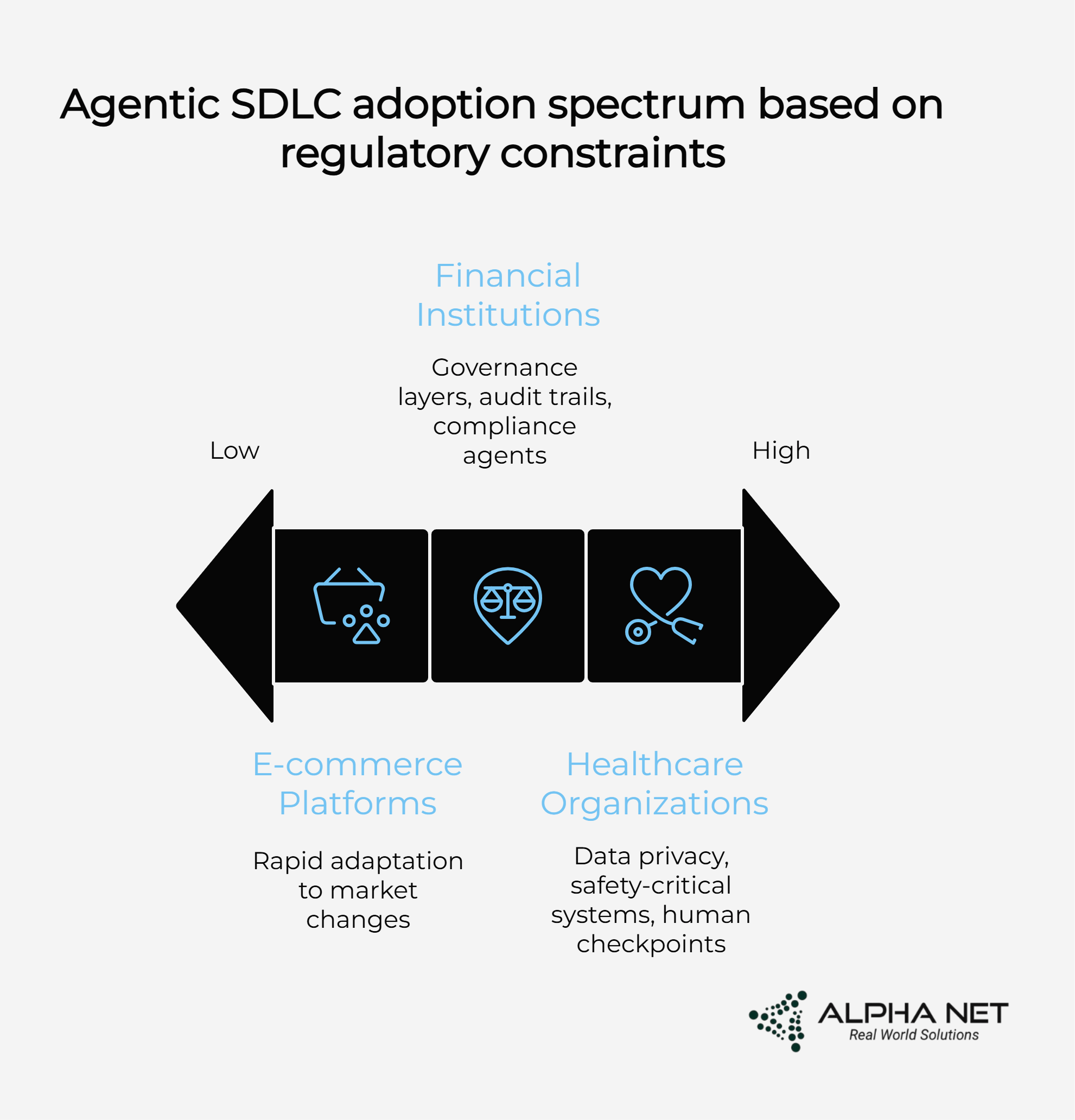

Industry-Specific Adaptations

In financial services, Agentic SDLC presents unique challenges because of regulatory compliance requirements. Financial institutions must add governance layers, including audit trails that demonstrate AI decision-making processes meet regulatory standards. Alpha Net’s approach includes specialized compliance agents that automatically generate documentation for auditors and ensure all code changes meet industry-specific security requirements.

Healthcare organizations implementing AI-driven software development lifecycles face similar regulatory constraints but with additional emphasis on data privacy and safety-critical systems. In these environments, AI agents must operate with human-in-the-loop checkpoints for any changes that could impact patient care or data security.
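A human-in-the-loop checkpoint can be enforced mechanically: an agent may merge routine changes on its own, but any change touching designated paths waits for a named human approver. The path patterns and function names below are hypothetical.

```python
from fnmatch import fnmatch
from typing import Optional

# Hypothetical paths a healthcare team might mark as patient-safety or data-security critical.
SENSITIVE_PATTERNS = ["src/clinical/*", "src/phi/*", "*/auth/*", "infra/encryption/*"]


def files_needing_review(changed_files: list[str]) -> list[str]:
    """Return the changed files that trigger a human-in-the-loop checkpoint."""
    return [
        path for path in changed_files
        if any(fnmatch(path, pattern) for pattern in SENSITIVE_PATTERNS)
    ]


def agent_may_merge(changed_files: list[str], approved_by: Optional[str] = None) -> bool:
    """Routine changes merge automatically; sensitive ones need a named approver."""
    return not files_needing_review(changed_files) or approved_by is not None
```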

E-commerce platforms using autonomous AI agents for continuous deployment benefit significantly from Agentic SDLC’s ability to rapidly adapt to changing market conditions. These organizations typically see the fastest ROI, as AI agents can automatically optimize for peak shopping periods, adjust inventory management systems, and implement A/B tests for conversion optimization.

Measuring Success: Key Performance Indicators

ROI metrics for an enterprise Agentic SDLC transformation should cover both efficiency gains and quality improvements. Organizations typically track:

Developer productivity metrics: Lines of code per hour, story points completed per sprint, and time spent on creative vs. maintenance work

Quality indicators: Defect escape rates, mean time to resolution, and customer satisfaction scores

Business impact measures: Time-to-market for new features, infrastructure cost optimization, and revenue attribution to AI-driven improvements

Vibe coding metrics: User emotional satisfaction scores, onboarding completion rates, and qualitative feedback on user experience
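Two of the quality indicators above have simple, standard definitions that can be computed from ticket data. The sketch assumes defects are already labeled by whether they were found before or after release, and that incidents carry opened/resolved timestamps; the function names are hypothetical.

```python
from datetime import datetime, timedelta


def defect_escape_rate(found_before_release: int, found_after_release: int) -> float:
    """Share of all defects that were first found in production."""
    total = found_before_release + found_after_release
    return found_after_release / total if total else 0.0


def mean_time_to_resolution(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (resolved - opened) over resolved incidents."""
    if not incidents:
        return timedelta(0)
    durations = [resolved - opened for opened, resolved in incidents]
    return sum(durations, timedelta(0)) / len(durations)
```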

Change Management and Team Adoption

Training developers for AI-assisted software development processes requires a fundamental shift in skill focus. Teams need training in prompt engineering, AI system monitoring, and verification techniques. Organizations should invest in upskilling programs that help developers transition from code writers to AI orchestrators.

The most successful implementations create “AI-human pair programming” environments where developers learn to leverage AI capabilities while maintaining oversight and creative control. This approach reduces resistance to change while maximizing the benefits of human creativity combined with AI efficiency.

X. Agentic AI DevOps: Where Operations Meet Intelligence

“Remember when deployment day felt like defusing a bomb?” Those white-knuckle moments are becoming relics of the past. Agentic AI DevOps transforms operations from reactive firefighting to proactive orchestration, where intelligent agents don’t just automate processes—they understand, predict, and optimize the entire infrastructure lifecycle.

The Evolution from DevOps to Agentic DevOps

Traditional DevOps brought development and operations together, but it still relied heavily on human expertise to make critical decisions. Engineers configured pipelines, monitored dashboards, and responded to alerts. While automation helped with repetitive tasks, the cognitive load of understanding system behavior, predicting failures, and optimizing performance remained firmly on human shoulders.

Agentic AI DevOps represents the next evolutionary leap. Instead of humans monitoring systems and reacting to problems, AI agents continuously understand system state, predict potential issues, and take preemptive action. The shift is from “automate the task” to “automate the thinking.”

Consider the difference: traditional DevOps might automatically restart a failing service, but Agentic DevOps understands why the service failed, predicts when it might fail again, and proactively addresses the root cause—perhaps by scaling infrastructure, optimizing code paths, or even suggesting architectural improvements.

The Agentic DevOps Stack: Intelligence at Every Layer

Infrastructure Agents: The Foundation Thinkers

These agents operate at the infrastructure level, understanding resource utilization patterns, predicting capacity needs, and optimizing costs. They don’t just scale resources; they understand the business context behind demand patterns.

One Alpha Net client, a fintech startup, deployed infrastructure agents that learned their transaction patterns. During a regulatory compliance audit, the agents automatically provisioned additional compute resources three days before the audit team arrived, having learned from previous audit cycles that compliance reporting typically caused 300% spikes in database queries. The result? Zero downtime during a critical business period.
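The audit example reduces to a simple rule: when a recurring event is on the calendar, provision for the largest load previously seen for that event, plus headroom, a few days early. A 300% spike corresponds to a load multiplier of 4x. The sketch assumes the agent already knows the event date and past peak multipliers; the function name and defaults are hypothetical.

```python
import math
from datetime import date, timedelta


def provisioning_plan(event_day: date, baseline_units: int, past_peak_multipliers: list[float],
                      lead_days: int = 3, headroom: float = 0.2) -> tuple[date, int]:
    """Return (start_date, units) for scaling ahead of a recurring event.

    past_peak_multipliers: observed load / baseline load in earlier occurrences
    (a 300% spike is 4.0)."""
    if not past_peak_multipliers:
        return event_day, baseline_units  # no history: nothing to pre-provision
    peak = max(past_peak_multipliers)
    units = math.ceil(baseline_units * peak * (1 + headroom))
    return event_day - timedelta(days=lead_days), units
```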

Pipeline Agents: The Workflow Orchestrators

Pipeline agents understand the entire software delivery lifecycle, from code commit to production deployment. They can dynamically adjust build strategies, optimize test execution, and make intelligent decisions about deployment timing and rollback strategies.

These agents might notice that deployments on Friday afternoons have a 40% higher failure rate and automatically suggest postponing non-critical releases until Monday morning. They can detect when a particular developer’s commits have been causing test failures and proactively run additional validation steps for that contributor’s code.
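The Friday-afternoon observation is a grouped failure-rate comparison over deployment history. A minimal sketch, assuming each past deployment is recorded as a (timestamp, succeeded) pair; the 40% threshold reuses the example figure from the text, and the function names are hypothetical.

```python
from collections import defaultdict
from datetime import datetime


def _window(ts: datetime) -> tuple[int, bool]:
    """Bucket a timestamp by weekday (Monday=0) and afternoon (12:00 or later)."""
    return ts.weekday(), ts.hour >= 12


def failure_rate_by_window(history):
    """history: list of (timestamp, succeeded) pairs for past deployments."""
    totals, failures = defaultdict(int), defaultdict(int)
    for ts, succeeded in history:
        totals[_window(ts)] += 1
        failures[_window(ts)] += 0 if succeeded else 1
    return {w: failures[w] / totals[w] for w in totals}


def should_postpone(planned: datetime, history, critical=False, max_relative_increase=0.4):
    """Suggest postponing a non-critical deploy when its time window has failed
    noticeably more often than deployments overall."""
    if critical or not history:
        return False
    overall = sum(1 for _, ok in history if not ok) / len(history)
    window_rate = failure_rate_by_window(history).get(_window(planned))
    if window_rate is None:
        return False  # no history for this window, so no basis for advice
    return window_rate > overall * (1 + max_relative_increase)
```

The function only suggests; whether to actually hold the release stays with the team.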

Observability Agents: The System Psychologists

Traditional monitoring tells you what happened. Observability agents understand the story of your system: how different components interact, what normal behavior looks like, and what subtle changes might indicate emerging problems.

These agents can detect that response times are increasing not because of load but because a recent dependency update changed caching behavior. They might also notice that error rates spike whenever one microservice calls another during specific weather conditions, a sign that a third-party API dependency struggles under the traffic surges those weather events cause.

Security Agents: The Cyber Guardians

Security in Agentic DevOps isn’t just about scanning for vulnerabilities; it’s about understanding threat landscapes, predicting attack vectors, and continuously adapting defenses.

Security agents can detect unusual access patterns that might indicate compromised credentials, automatically rotate keys when suspicious activity is detected, and even predict which parts of your infrastructure might be targeted based on current threat intelligence and your system’s attack surface.
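A simple version of "detect unusual access patterns" is to learn which networks each credential is normally used from and flag first-time sources once a learning period has passed. This is an illustrative heuristic, not a threat-intelligence system; the class, the learning period, and treating a flag as a rotation trigger are all assumptions.

```python
from collections import defaultdict


class AccessMonitor:
    """Learns the source networks each credential normally uses and flags
    accesses from a never-seen network after a learning period."""

    def __init__(self, learning_events: int = 50):
        self.learning_events = learning_events
        self.seen = defaultdict(set)
        self.counts = defaultdict(int)

    def observe(self, credential_id: str, source_network: str) -> bool:
        """Return True if this access looks unusual (a candidate for key rotation)."""
        self.counts[credential_id] += 1
        known = self.seen[credential_id]
        unusual = self.counts[credential_id] > self.learning_events and source_network not in known
        known.add(source_network)  # remember the network either way
        return unusual
```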

Real-World Agentic DevOps in Action

Case Study 1: The Self-Healing E-commerce Platform

A major e-commerce platform implemented Agentic DevOps during their busiest shopping season. Their infrastructure agents learned that traffic spikes followed predictable patterns, not just during sales events but also during specific social media mentions and weather patterns that kept people indoors.

The agents didn’t just scale resources reactively. They began predicting demand 6-12 hours in advance, pre-positioning inventory data in edge caches, and optimizing database query patterns before traffic arrived. During Black Friday, while competitors struggled with site slowdowns, this platform operated flawlessly with 99.99% uptime and 15% lower infrastructure costs than the previous year.

Case Study 2: The Autonomous Startup

A small startup with only two developers implemented Agentic DevOps out of necessity; they simply couldn’t afford dedicated DevOps engineers. Their pipeline agents learned to optimize build times by analyzing code changes and only running relevant tests. Security agents continuously monitored for vulnerabilities and automatically applied patches during low-traffic periods.

The result? A two-person team achieved the operational reliability typically requiring a team of six. Their deployment frequency increased from weekly to multiple times per day, while their mean time to recovery dropped from hours to minutes.
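"Only running relevant tests" is usually called test impact analysis. A minimal sketch, assuming a map from each test to the source files it exercised in an earlier coverage run (names are hypothetical); when a change touches a file that no test is known to cover, it falls back to the full suite rather than silently skipping coverage.

```python
def select_tests(changed_files, test_dependencies):
    """Return the tests to run for a change.

    test_dependencies maps test name -> set of source files it exercises."""
    changed = set(changed_files)
    covered = set().union(*test_dependencies.values()) if test_dependencies else set()
    if changed - covered:
        # Unknown impact: run everything instead of guessing.
        return sorted(test_dependencies)
    return sorted(test for test, deps in test_dependencies.items() if deps & changed)
```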

Case Study 3: The Regulated Healthcare Platform

A healthcare technology company operating under strict HIPAA compliance requirements deployed Agentic DevOps with specialized compliance agents. These agents understood regulatory requirements and automatically generated audit trails, ensured data encryption at rest and in transit, and monitored for any activities that might indicate compliance violations.

When a developer accidentally included PHI (Protected Health Information) in a log file, the security agent detected it within minutes, automatically scrubbed the sensitive data, and generated a compliance report. What could have been a devastating regulatory violation became a learning opportunity that strengthened their security posture.
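Detect-and-scrub for PHI in logs often starts with pattern matching as a first layer. The sketch below is deliberately narrow, assuming three hypothetical patterns; it is not a HIPAA-compliant detector, which would need far broader coverage and human review.

```python
import re

# Illustrative patterns only; real PHI includes names, addresses, dates of birth,
# free-text clinical notes, and more.
PHI_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def scrub_log_line(line: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders and report which PHI types were found."""
    found = []
    for label, pattern in PHI_PATTERNS.items():
        line, count = pattern.subn(f"[REDACTED-{label}]", line)
        if count:
            found.append(label)
    return line, found
```

The list of detected types is what a compliance report would be generated from.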

The Technical Architecture of Agentic DevOps

Agent Communication Patterns

Agentic DevOps requires sophisticated communication patterns between agents. Unlike traditional microservices that communicate through APIs, agents use context-aware messaging that includes not just data, but intent, confidence levels, and decision rationale.

For example, when an infrastructure agent detects increasing load, it doesn’t just send a “scale up” message. It communicates the context: “Load increasing due to mobile app user surge, confidence 85%, recommend scaling web tier with mobile-optimized instances, estimated cost impact $200/hour, predicted duration 3 hours based on historical patterns.”
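That message can be carried as a structured payload rather than free text, so receiving agents can act on the confidence and cost fields directly. The field names are hypothetical; the values mirror the example in the text.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class AgentMessage:
    sender: str
    intent: str                  # the action the sender recommends
    confidence: float            # 0..1
    rationale: str               # why the sender believes this
    est_cost_per_hour_usd: float
    est_duration_hours: float

    def __post_init__(self):
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")

    def estimated_total_cost(self) -> float:
        return self.est_cost_per_hour_usd * self.est_duration_hours

    def to_json(self) -> str:
        return json.dumps(asdict(self))


msg = AgentMessage(
    sender="infrastructure-agent",
    intent="scale web tier with mobile-optimized instances",
    confidence=0.85,
    rationale="load increasing due to mobile app user surge; duration from historical patterns",
    est_cost_per_hour_usd=200.0,
    est_duration_hours=3.0,
)
```

A receiver could, for example, auto-approve messages above a confidence floor and below a total-cost ceiling, and escalate the rest.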

Contextual Decision Making

Agentic DevOps agents make decisions based on business context, not just technical metrics. They understand that a 500ms response time might be acceptable for a batch processing job but catastrophic for a real-time trading platform.

This contextual awareness comes from training agents on business logic, user behavior patterns, and organizational priorities. They learn that customer-facing APIs should prioritize availability over cost optimization, while internal tools can tolerate brief downtime for maintenance.
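The 500 ms example can be made concrete with per-workload latency budgets. The workload classes, the budget values, and the 2× "degraded" band below are assumptions for illustration; the text says agents learn these priorities, whereas this sketch hard-codes them to show only the decision step.

```python
from enum import Enum


class Workload(Enum):
    REALTIME_TRADING = "realtime_trading"
    CUSTOMER_API = "customer_api"
    INTERNAL_TOOL = "internal_tool"
    BATCH_JOB = "batch_job"


# Hypothetical latency budgets in milliseconds; real values would come from SLOs.
LATENCY_BUDGET_MS = {
    Workload.REALTIME_TRADING: 50,
    Workload.CUSTOMER_API: 300,
    Workload.INTERNAL_TOOL: 2_000,
    Workload.BATCH_JOB: 60_000,
}


def assess_latency(workload: Workload, observed_ms: float) -> str:
    """Classify the same raw latency differently depending on business context."""
    budget = LATENCY_BUDGET_MS[workload]
    if observed_ms <= budget:
        return "ok"
    if observed_ms <= 2 * budget:
        return "degraded"
    return "critical"


for wl in (Workload.BATCH_JOB, Workload.CUSTOMER_API, Workload.REALTIME_TRADING):
    print(wl.value, assess_latency(wl, 500))
```

The same 500 ms observation prints `batch_job ok`, `customer_api degraded`, and `realtime_trading critical`.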

Continuous Learning and Adaptation

Perhaps most importantly, Agentic DevOps systems continuously learn and adapt. They don’t just execute predetermined rules; they develop an understanding of system behavior and improve their decision-making over time.

An agent might initially be conservative about scaling decisions, but if it learns that under-provisioning a specific workload costs more than over-provisioning it, it becomes more aggressive about preemptive scaling. This learning happens continuously and is shared across the agent network.
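The text does not specify how an agent learns this trade-off. One well-known way to frame it is the newsvendor model: if running short of capacity costs c_u per unit and over-provisioning costs c_o per unit, the cost-minimizing capacity is the demand quantile c_u / (c_u + c_o). The sketch below assumes that framing and learns the two costs as moving averages; `ScalingPolicy` and its parameters are hypothetical. As observed under-provisioning costs rise, the target quantile rises, which is the shift toward preemptive scaling the paragraph describes.

```python
import math
from typing import List


class ScalingPolicy:
    """Hypothetical learner that decides how much capacity to provision.

    Provisions at the newsvendor critical ratio c_under / (c_under + c_over)
    of observed demand. Costs are learned as exponential moving averages.
    """

    def __init__(self, alpha: float = 0.2) -> None:
        self.alpha = alpha        # learning rate for the moving averages
        self.cost_under = 1.0     # equal starting costs -> provision at the median
        self.cost_over = 1.0
        self.demand_history: List[float] = []

    def observe(self, demand: float, under_cost: float, over_cost: float) -> None:
        """Record last period's demand and per-unit costs of each mistake."""
        self.demand_history.append(demand)
        self.cost_under += self.alpha * (under_cost - self.cost_under)
        self.cost_over += self.alpha * (over_cost - self.cost_over)

    def critical_ratio(self) -> float:
        return self.cost_under / (self.cost_under + self.cost_over)

    def target_capacity(self) -> float:
        """Empirical demand quantile at the critical ratio (nearest-rank method)."""
        if not self.demand_history:
            raise ValueError("no demand observed yet")
        ordered = sorted(self.demand_history)
        rank = math.ceil(self.critical_ratio() * len(ordered))
        return ordered[max(rank, 1) - 1]


# Under-provisioning has been 9x costlier than over-provisioning.
policy = ScalingPolicy()
for demand in range(10, 101, 10):
    policy.observe(demand, under_cost=9.0, over_cost=1.0)
print(policy.critical_ratio(), policy.target_capacity())
```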

Measuring Success in Agentic DevOps

Traditional Metrics Evolution

Traditional DevOps metrics like deployment frequency, lead time, and MTTR (Mean Time to Recovery) remain important, but Agentic DevOps introduces new dimensions:

- Prediction Accuracy: How often do agents correctly predict and prevent issues?

- Autonomous Resolution Rate: What percentage of incidents are resolved without human intervention?

- Context Awareness Score: How well do agents understand business impact when making decisions?

- Learning Velocity: How quickly do agents adapt to new patterns or changes?
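Autonomous resolution rate, a prediction hit rate, and MTTR can be computed directly from incident records once each incident is tagged with how it was handled. The `Incident` fields below are an assumed schema; Context Awareness Score and Learning Velocity have no standard definition, so the sketch leaves them out. The hit rate here counts only incidents that actually occurred, so it cannot credit issues that were prevented entirely.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Incident:
    """Assumed incident record; field names are illustrative."""
    minutes_to_recover: float
    resolved_by_agent: bool     # closed with no human intervention
    predicted_in_advance: bool  # an agent flagged it before user impact


def _share(flags: List[bool]) -> float:
    """Fraction of True values; 0.0 for an empty list."""
    return sum(flags) / len(flags) if flags else 0.0


def autonomous_resolution_rate(incidents: List[Incident]) -> float:
    return _share([i.resolved_by_agent for i in incidents])


def prediction_hit_rate(incidents: List[Incident]) -> float:
    return _share([i.predicted_in_advance for i in incidents])


def mttr_minutes(incidents: List[Incident]) -> float:
    if not incidents:
        return 0.0
    return sum(i.minutes_to_recover for i in incidents) / len(incidents)


incidents = [
    Incident(4, resolved_by_agent=True, predicted_in_advance=True),
    Incident(6, resolved_by_agent=True, predicted_in_advance=True),
    Incident(50, resolved_by_agent=False, predicted_in_advance=False),
    Incident(20, resolved_by_agent=True, predicted_in_advance=False),
]
print(autonomous_resolution_rate(incidents))  # 0.75
print(prediction_hit_rate(incidents))         # 0.5
print(mttr_minutes(incidents))                # 20.0
```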

Business Impact Metrics

Agentic DevOps success ultimately shows up in business metrics:

- Revenue Protection: How much revenue is protected through proactive issue prevention?

- Cost Optimization: What’s the ratio of infrastructure savings to agent operation costs?

- Developer Productivity: How much time do developers save by not dealing with operational issues?

- Customer Satisfaction: How does proactive infrastructure management impact user experience?

The Human Factor in Agentic DevOps

Evolving Roles, Not Replacing Humans

Agentic DevOps doesn’t eliminate DevOps engineers; it elevates them. Instead of monitoring dashboards and responding to alerts, engineers become system architects, defining policies and constraints that guide agent behavior.

DevOps engineers in agentic environments spend their time:

- Designing system architectures that agents can understand and optimize

- Setting business context and priorities that inform agent decisions

- Analyzing patterns that agents identify to improve overall system design

- Handling edge cases and novel situations that agents haven’t encountered

Trust Building and Validation

The transition to Agentic DevOps requires building trust between humans and agents. This happens through transparency: agents that can explain their reasoning, show their confidence levels, and provide audit trails for their decisions.

Successful implementations often start with agents in “advisory mode,” where they suggest actions but require human approval. As trust builds and agents prove their reliability, they’re gradually given more autonomous authority.
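The progression from advisory mode to autonomous authority can be expressed as a small policy object. The three levels, the 0.9 confidence floor, the promotion streak, and the demote-on-failure rule below are all assumptions chosen for illustration; the text only says that authority grows as trust builds.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    ADVISORY = 0     # every action needs human approval
    SUPERVISED = 1   # low-risk, high-confidence actions run automatically
    AUTONOMOUS = 2   # all actions run; humans review the audit trail


@dataclass
class TrustGate:
    """Hypothetical gate that widens an agent's authority as it proves reliable."""
    level: Autonomy = Autonomy.ADVISORY
    min_confidence: float = 0.9
    promote_after: int = 20   # consecutive successful actions before promotion
    streak: int = 0

    def decide(self, confidence: float, high_risk: bool) -> str:
        if self.level is Autonomy.AUTONOMOUS:
            return "execute"
        if (self.level is Autonomy.SUPERVISED and not high_risk
                and confidence >= self.min_confidence):
            return "execute"
        return "await_approval"

    def record_outcome(self, success: bool) -> None:
        """Track reliability; a failure resets the streak and demotes one level."""
        if not success:
            self.streak = 0
            self.level = Autonomy(max(self.level - 1, Autonomy.ADVISORY))
            return
        self.streak += 1
        if self.streak >= self.promote_after and self.level < Autonomy.AUTONOMOUS:
            self.level = Autonomy(self.level + 1)
            self.streak = 0
```

Demoting on any failure is a deliberately cautious choice; a team might instead demote only on failures above a severity threshold.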

Challenges and Pitfalls in Agentic DevOps

The Complexity Trap

Agentic DevOps can become overwhelmingly complex if not properly architected. Organizations sometimes try to make agents too intelligent too quickly, leading to unpredictable behavior and loss of control.

The key is starting simple and building complexity gradually. Begin with agents that handle well-understood, low-risk operations, then expand their capabilities as they prove reliable.

Agent Alignment Issues

When multiple agents optimize for different objectives, conflicts can arise. An infrastructure agent optimizing for cost might conflict with a performance agent optimizing for response time. Without proper coordination, agents can work against each other.

This requires careful design of agent hierarchies and communication protocols. Higher-level orchestration agents need to understand business priorities and resolve conflicts between specialized agents.
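One simple way an orchestration agent could arbitrate is to score each specialized agent's proposal against weighted business priorities. Weighted scoring is a stand-in chosen for illustration, not a method the text prescribes; the `Proposal` type, the impact scale, and the priority weights are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Proposal:
    agent: str
    action: str
    # Predicted effect on each objective, normalized to [-1, 1] (positive = better).
    impact: Dict[str, float]


# Hypothetical business priorities per service class; weights sum to 1.
PRIORITIES: Dict[str, Dict[str, float]] = {
    "customer_facing": {"performance": 0.7, "cost": 0.3},
    "internal": {"performance": 0.3, "cost": 0.7},
}


def resolve(proposals: List[Proposal], service_class: str) -> Proposal:
    """Pick the proposal with the highest priority-weighted score."""
    weights = PRIORITIES[service_class]

    def score(p: Proposal) -> float:
        return sum(w * p.impact.get(obj, 0.0) for obj, w in weights.items())

    return max(proposals, key=score)


cost_agent = Proposal("cost-agent", "downsize to 2 replicas",
                      {"cost": 0.8, "performance": -0.4})
perf_agent = Proposal("performance-agent", "scale to 6 replicas",
                      {"cost": -0.5, "performance": 0.6})

print(resolve([cost_agent, perf_agent], "customer_facing").agent)
print(resolve([cost_agent, perf_agent], "internal").agent)
```

With these weights the performance agent wins for the customer-facing service (0.27 versus -0.04) and the cost agent wins for the internal one (0.44 versus -0.17), mirroring the availability-versus-cost priorities described earlier in the section.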

The Black Box Problem

As agents become more sophisticated, their decision-making can become opaque. This is particularly problematic in regulated industries where audit trails and explainability are critical.

Successful Agentic DevOps implementations invest heavily in explainable AI, ensuring that agents can articulate their reasoning in human-understandable terms.

The Future of Agentic DevOps

Predictive Infrastructure

The next evolution of Agentic DevOps will be truly predictive infrastructure that anticipates needs before they arise. Imagine infrastructure that scales for traffic spikes before they happen, applies security patches before vulnerabilities can be exploited, and optimizes performance for user behaviors it predicts but has not yet observed.

Cross-Organizational Learning

Future Agentic DevOps platforms will enable safe sharing of learned patterns across organizations. An agent that learns an optimization technique in one environment could share that knowledge (without exposing sensitive data) to benefit similar systems elsewhere.

Autonomous System Design

Eventually, Agentic DevOps will extend beyond operations into system design itself. Agents will suggest architectural improvements, identify opportunities for microservice decomposition, and even propose entirely new system designs based on usage patterns and business requirements.

Getting Started with Agentic DevOps

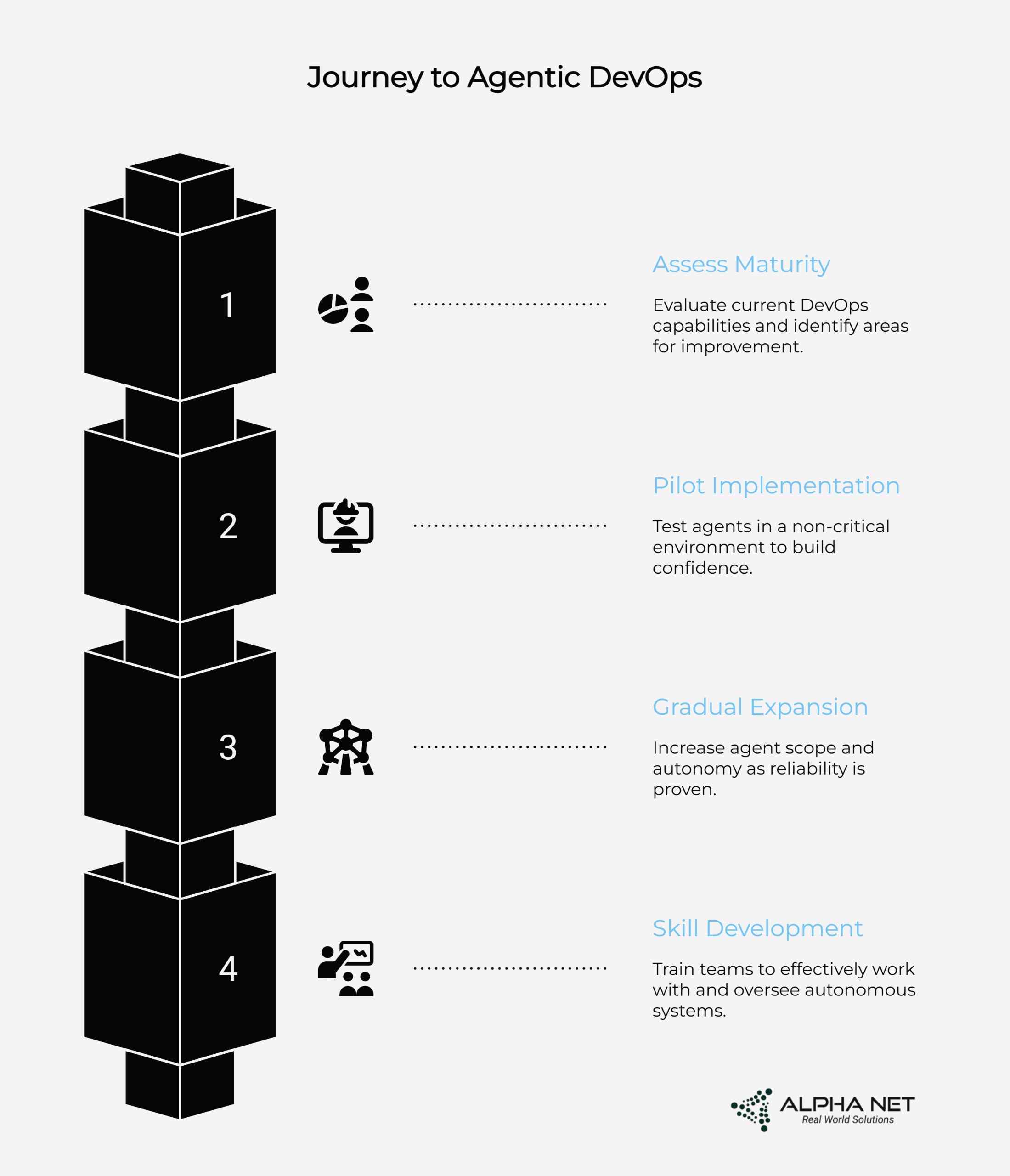

Assessment and Planning

Organizations should begin by assessing their current DevOps maturity and identifying areas where agents could provide immediate value. Start with well-defined, repeatable processes that have clear success criteria.

Pilot Implementation

Choose a non-critical environment for initial implementation. This allows teams to build confidence and understand agent behavior without risking production systems.

Gradual Expansion

As agents prove their value, gradually expand their scope and autonomy. The key is building trust through demonstrated reliability and transparency.

Skill Development

Invest in training teams to work effectively with agents. This includes understanding how to set appropriate constraints, interpret agent recommendations, and maintain oversight of autonomous systems.

XI. The Future: Self-Evolving Systems

The ultimate vision of Agentic SDLC goes beyond automation to true autonomy—software that adapts, evolves, and repairs itself.

In this future:

- Systems will continuously optimize their own architecture based on usage patterns

- Code will refactor itself to improve performance and security

- Applications will adapt features and interfaces based on user behavior and emotional feedback

- Infrastructure will self-tune for optimal resource utilization

- Emotional intelligence will be embedded throughout the software stack

This represents a unified approach where AIOps, FinOps, and DevSecOps converge under agentic orchestration. The end goal is autonomous software systems with human-aligned goals—systems that understand their purpose and continuously evolve to fulfill it more effectively.

XII. Final Word: It’s Not Just Faster—It’s Smarter

Agentic SDLC doesn’t just speed up development; it redefines who does what. Developers become orchestrators, AI agents become executors, and organizations move from shipping code to shipping intelligence.

As Amplify Partners insightfully notes, “In this paradigm, value will increasingly shift from writing code to verifying and validating code.” The core skill becomes less about syntax and more about intent—defining what we want software to do and verifying that it meets human needs.

With vibe coding, we’re not just translating business requirements into technical specifications; we’re encoding human emotion, cultural context, and experiential design into software that responds to users as individuals rather than generic personas.

With Alpha Net’s structured, outcome-driven approach, enterprises avoid hype cycles and build toward an adaptive, intelligent software future—one where technology continuously evolves to serve human goals without constant manual intervention.

This isn’t just a technical shift but a fundamental reimagining of how we create software—and ultimately, what software can become when it truly understands and responds to human needs at both functional and emotional levels.